How TELUS Digital leverages autonomous quality control agents for improved AI training data

Every model’s performance ceiling is defined directly by the quality of the data used to test and train it. As a trusted AI training data partner with over 20 years of field-tested expertise, we understand the issues that come with suboptimal data. Without high-quality data, AI models can produce biased results, waste valuable resources, miss key opportunities and silently erode your business reputation and competitive advantage. This is why we treat quality control (QC) processes, tools and expertise as architectural principles engineered into our training data pipelines, not as a post-processing step. One of the ways we accomplish this is through automated QC agents. Read on to discover how we’ve adopted a sophisticated human-AI collaborative system in our processes and its impact on efficiency, production time and more.

Manual quality control challenges in frontier data production pipelines

Data validation methods are evolving to account for highly specialized frontier datasets that involve complex, nuanced and multi-step reasoning. These advanced datasets, such as those containing intricate legal analyses or advanced mathematical proofs, present unique challenges that go beyond the capabilities of traditional QC methods. While human intelligence and expert reviews remain crucial, issues like process latency, subjectivity and inconsistency can hamper scale in data production when dealing with these complex datasets. These challenges include:

Cost and scalability constraints

- Sourcing, training and compensating subject matter experts (SMEs) for comprehensive data review is prohibitively expensive and only scales linearly with additional human resources.

- Despite rubrics, human reviewers have subjective interpretations leading to inconsistent quality standards across datasets.

- Internal teams may lack the specialized, doctoral-level expertise required for accurate validation in highly specialized domains.

Velocity and feedback bottlenecks

- Expert validation of complex entries creates massive pipeline bottlenecks, directly conflicting with the need for high-quality data at speed.

- Large-scale pipelines create lengthy review queues, preventing timely interventions and allowing errors to propagate across task batches. Without a timely feedback loop, AI trainers keep repeating the same mistakes until a review flags them.

- Delayed feedback loops result in high rejection rates and extensive rework cycles that stall data delivery for mission-critical model training.

Introducing the QC agent for live, programmatic feedback

To overcome these challenges, TELUS Digital adopted the LLM-as-a-jury approach to develop our sophisticated human-AI collaborative system: the QC agent. At its core, this system leverages a panel of LLM evaluators composed of heterogeneous models that act as impartial judges. This multi-model approach effectively evaluates complex datasets while overcoming the biases inherent to a single LLM evaluator. These biases include:

- Intra-model bias (a model's tendency to favor its own responses),

- Position bias (favoring answers in specific positions),

- Knowledge bias (over-reliance on pre-trained knowledge instead of provided context) and

- Format bias (performing optimally only with specific prompt formats).

Each evaluator model independently scores a given output, with individual scores pooled together through a voting function to ensure more balanced and accurate assessments.
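As a rough illustration of the pooling step, and not a description of the production system, the sketch below combines independent verdicts with a simple majority vote. The evaluator functions, the `pass`/`fail` labels and the tie-breaking rule are all assumptions made for the example. Real evaluators would wrap calls to different LLMs.

```python
from collections import Counter
from typing import Callable, Sequence

# Hypothetical evaluator signature: takes a submission, returns "pass" or "fail".
Evaluator = Callable[[str], str]


def majority_vote(verdicts: Sequence[str]) -> str:
    """Return the most common verdict; ties resolve to 'fail' (an assumed, conservative rule)."""
    counts = Counter(verdicts)
    top = max(counts.values())
    winners = [label for label, count in counts.items() if count == top]
    return winners[0] if len(winners) == 1 else "fail"


def jury_verdict(submission: str, evaluators: Sequence[Evaluator]) -> str:
    # Each evaluator scores independently; none sees another's verdict.
    verdicts = [evaluate(submission) for evaluate in evaluators]
    return majority_vote(verdicts)


# Stand-in evaluators used only to make the sketch runnable.
def strict(text: str) -> str:
    return "pass" if "therefore" in text and len(text) > 20 else "fail"


def lenient(text: str) -> str:
    return "pass" if text.strip() else "fail"


def keyword(text: str) -> str:
    return "pass" if "therefore" in text else "fail"


if __name__ == "__main__":
    jury = [strict, lenient, keyword]
    print(jury_verdict("x = 2, therefore x^2 = 4 holds", jury))
    print(jury_verdict("short", jury))
```

Majority voting is only one possible voting function. Averaging numeric scores or requiring unanimity on critical parameters would slot into the same structure.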

The three-pillar process of the QC agent

Our proprietary QC agent operates on a structured, multi-stage process built on three pillars:

1. Atomic decomposition: A complex problem is difficult to evaluate monolithically. The QC agent breaks down the expert's submission into its smallest logical, evaluable components or "atoms." For example:

- Math proof: Instead of just judging the final answer, the LLM analyzes each individual step of the proof. Is the application of theorem A in step 2 correct? Is the algebraic manipulation in step 4 valid?

- Legal reasoning: The LLM deconstructs the analysis into its core parts: the identification of the legal principle, the application of that principle to the facts of the case and the logical soundness of the conclusion.

By breaking down the problem, evaluation becomes objective, granular and far more accurate.

2. Real-time feedback loop: The QC agent's most transformative feature is that it delivers immediate, interactive feedback during data generation, evolving QC from a final checkpoint into a continuous collaborative process. This real-time validation prevents error propagation and reduces time wasted on flawed data paths before they compound.

3. Intelligent quality gating: The QC agent acts as an automated, intelligent checkpoint.

- Based on a predefined set of quality standards and rubrics, the LLM "judges" the decomposed parts of the submission.

- If the data point fails to meet the required threshold (for example, it contains a logical fallacy or a factual error, or deviates from formatting standards), it is automatically "gated" and sent back to the expert with specific, actionable feedback for revision.

- This ensures that only data that has passed a rigorous, consistent and automated check ever reaches the final stages of review or, ultimately, the customer.
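The decomposition and gating pillars can be sketched together in a few lines. This is a minimal illustration under stated assumptions: the line-per-atom decomposition, the `judge` callable, the 0 to 1 score scale and the 0.8 threshold are all hypothetical choices for the example, and a real judge would be an LLM jury applying the project rubric.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical judge signature: atom text -> (score in [0, 1], feedback message).
Judge = Callable[[str], Tuple[float, str]]


@dataclass
class AtomResult:
    atom: str
    score: float
    feedback: str


def decompose(submission: str) -> List[str]:
    """Naive decomposition (assumed): one atom per non-empty line."""
    return [line.strip() for line in submission.splitlines() if line.strip()]


def gate(submission: str, judge: Judge, threshold: float = 0.8) -> Dict[str, object]:
    """Judge every atom; the submission passes only if no atom falls below the threshold."""
    results = []
    for atom in decompose(submission):
        score, feedback = judge(atom)
        results.append(AtomResult(atom, score, feedback))
    failures = [r for r in results if r.score < threshold]
    return {
        "passed": not failures,
        # Feedback is tied to the specific atom that failed, so it is actionable.
        "feedback": [f"{r.atom!r}: {r.feedback}" for r in failures],
    }


def toy_judge(atom: str) -> Tuple[float, str]:
    # Stand-in for an LLM judge: flags one obviously invalid algebraic step.
    if "divide by 0" in atom.lower():
        return 0.0, "Division by zero is undefined."
    return 1.0, "OK"


if __name__ == "__main__":
    proof = "Step 1: let x = 0\nStep 2: divide by 0 on both sides\nStep 3: conclude 1 = 2"
    print(gate(proof, toy_judge))
```

Because each failure is reported against a single atom, the expert sees which step to revise rather than a blanket rejection.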

Implementation and workflow

At TELUS Digital, our QC agent is fine-tuned to comprehend project-specific objectives and autonomously evaluate submissions against complex, multi-faceted QC rubrics. We train the model with a "gold" reference dataset containing few-shot, in-context examples of valid and invalid prompt, response and rationale triples. The prompt is structured with natural language instructions defining what constitutes good or bad output, enabling the agent to act as a real-time validation layer providing instantaneous, parameter-level feedback.
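To make the idea of few-shot, in-context examples concrete, the sketch below assembles a judge prompt from "gold" triples. The `GoldExample` fields, the `VALID`/`INVALID` labels and the prompt layout are assumptions for illustration only, not the format used on the platform.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GoldExample:
    prompt: str
    response: str
    rationale: str
    valid: bool  # True for a known-good triple, False for a known-bad one


def build_judge_prompt(
    instructions: str,
    examples: List[GoldExample],
    prompt: str,
    response: str,
    rationale: str,
) -> str:
    """Concatenate instructions, labeled gold examples and the triple to evaluate."""
    parts = [instructions.strip(), ""]
    for i, ex in enumerate(examples, start=1):
        parts += [
            f"Example {i}:",
            f"Prompt: {ex.prompt}",
            f"Response: {ex.response}",
            f"Rationale: {ex.rationale}",
            f"Verdict: {'VALID' if ex.valid else 'INVALID'}",
            "",
        ]
    # The final block is left open so the model completes the verdict.
    parts += [
        "Now evaluate:",
        f"Prompt: {prompt}",
        f"Response: {response}",
        f"Rationale: {rationale}",
        "Verdict:",
    ]
    return "\n".join(parts)


if __name__ == "__main__":
    gold = [
        GoldExample("What is 2 + 2?", "4", "Basic addition.", True),
        GoldExample("What is 2 + 2?", "5", "Basic addition.", False),
    ]
    print(build_judge_prompt(
        "Judge whether the response and rationale correctly answer the prompt.",
        gold, "What is 3 * 3?", "9", "Basic multiplication.",
    ))
```

Including both valid and invalid triples gives the evaluator a contrast to anchor on, which mirrors the "good or bad output" framing described above.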

Implementation involves three key phases:

- Schema definition: Translating project guidelines into AI-ready QC rubrics.

- Model selection and fine-tuning: Choosing and fine-tuning specialized model cohorts using curated, high-quality datasets.

- Validation and deployment: Testing against validation sets and deploying as platform plugins.
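The schema definition phase can be pictured as turning written guidelines into a structured rubric that software can check. The sketch below is one possible shape, assuming weighted parameters with per-parameter minimum scores. The class names, fields and example parameters are hypothetical and do not reflect the Quality Lab data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class RubricParameter:
    name: str
    description: str  # natural-language definition of good output
    weight: float     # relative importance; weights are assumed to sum to 1
    min_score: float  # a score below this fails the parameter


@dataclass
class Rubric:
    project: str
    parameters: List[RubricParameter] = field(default_factory=list)

    def validate(self) -> None:
        """Reject rubrics with duplicate parameter names or weights that do not sum to 1."""
        names = [p.name for p in self.parameters]
        if len(names) != len(set(names)):
            raise ValueError("duplicate parameter names")
        if abs(sum(p.weight for p in self.parameters) - 1.0) > 1e-9:
            raise ValueError("weights must sum to 1")

    def weighted_score(self, scores: Dict[str, float]) -> float:
        return sum(p.weight * scores[p.name] for p in self.parameters)

    def failing_parameters(self, scores: Dict[str, float]) -> List[str]:
        return [p.name for p in self.parameters if scores[p.name] < p.min_score]


if __name__ == "__main__":
    rubric = Rubric("demo-project", [
        RubricParameter("correctness", "Every step is logically valid.", 0.5, 0.9),
        RubricParameter("completeness", "No required step is missing.", 0.3, 0.7),
        RubricParameter("formatting", "Follows the required template.", 0.2, 0.5),
    ])
    rubric.validate()
    scores = {"correctness": 1.0, "completeness": 0.5, "formatting": 1.0}
    print(rubric.weighted_score(scores), rubric.failing_parameters(scores))
```

Keeping a minimum score per parameter, in addition to the weighted total, lets feedback name the specific parameter that fell short.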

The workflow is designed to be intuitive and user-friendly as follows:

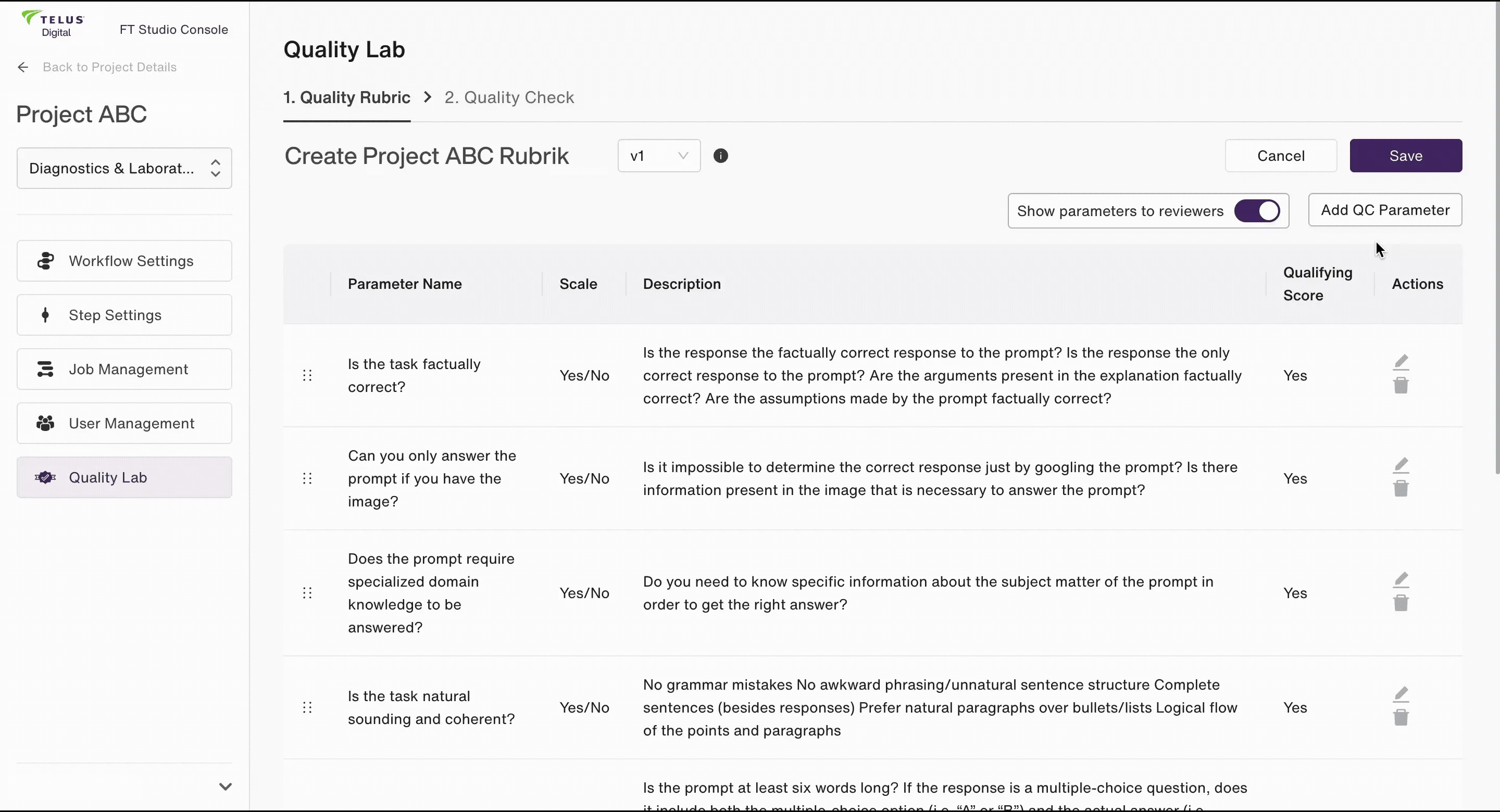

QC rubric setup: Project managers define QC parameters in "Quality Lab" on Fine-Tune Studio, our proprietary generative AI training platform.

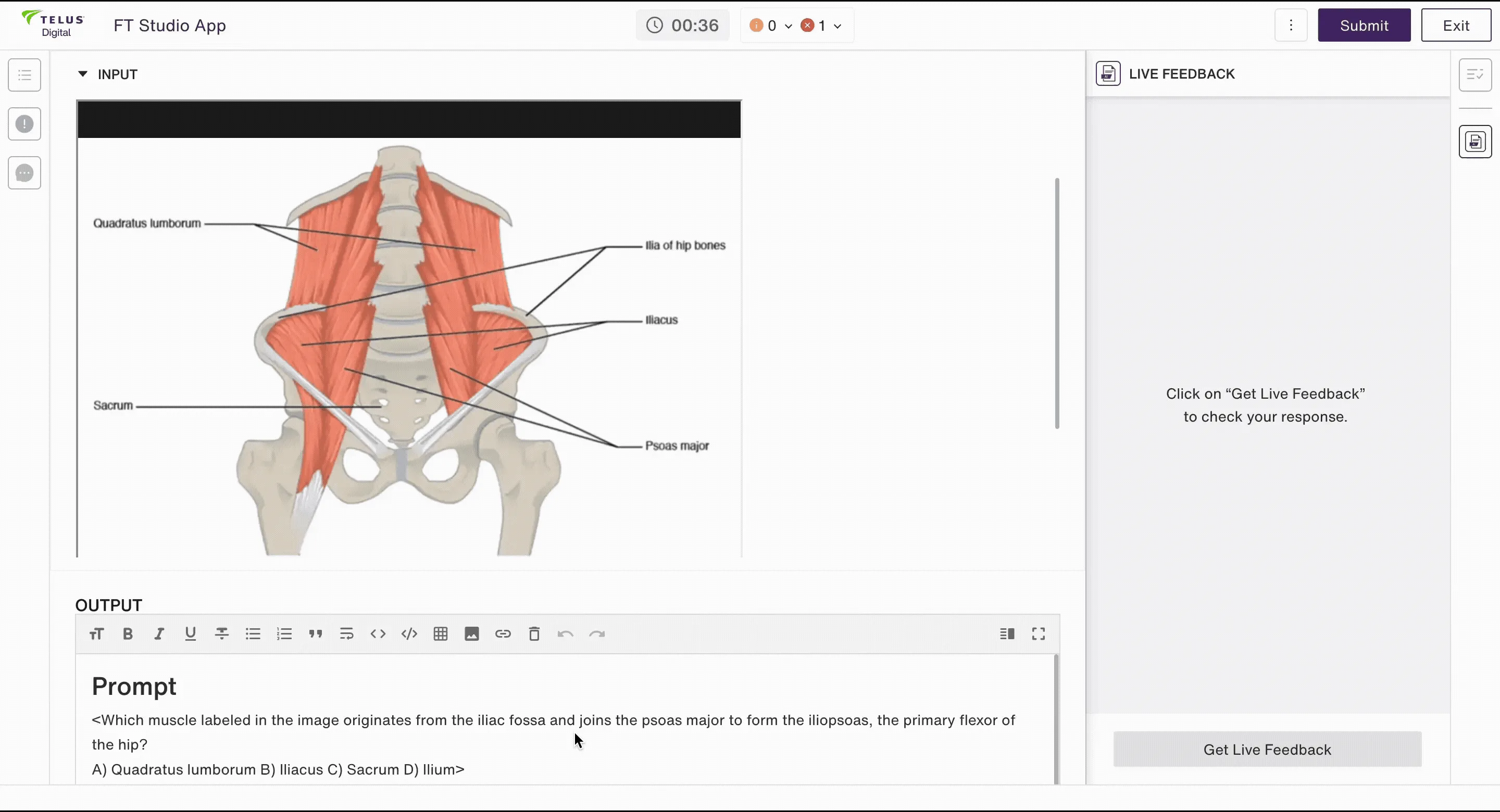

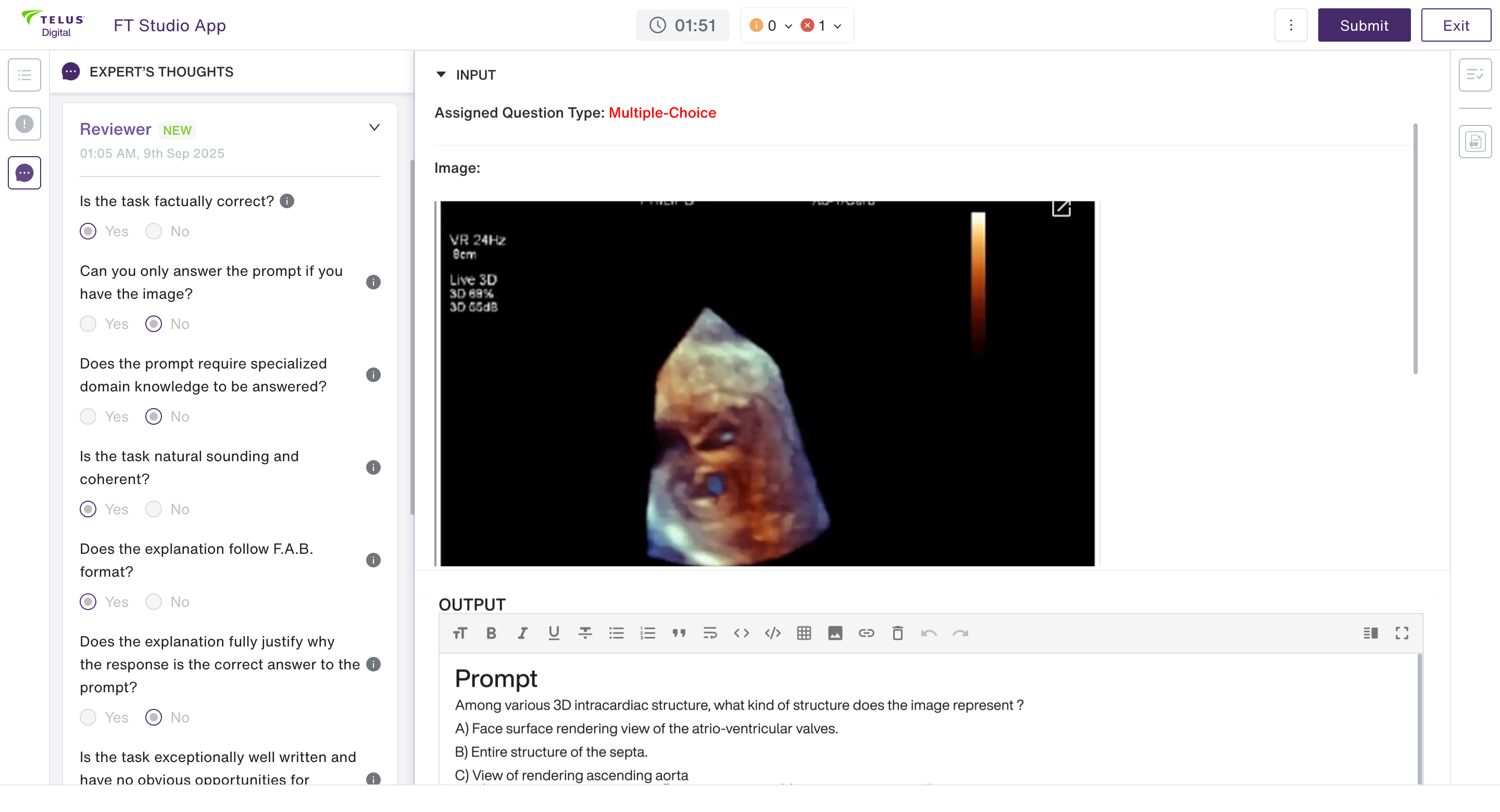

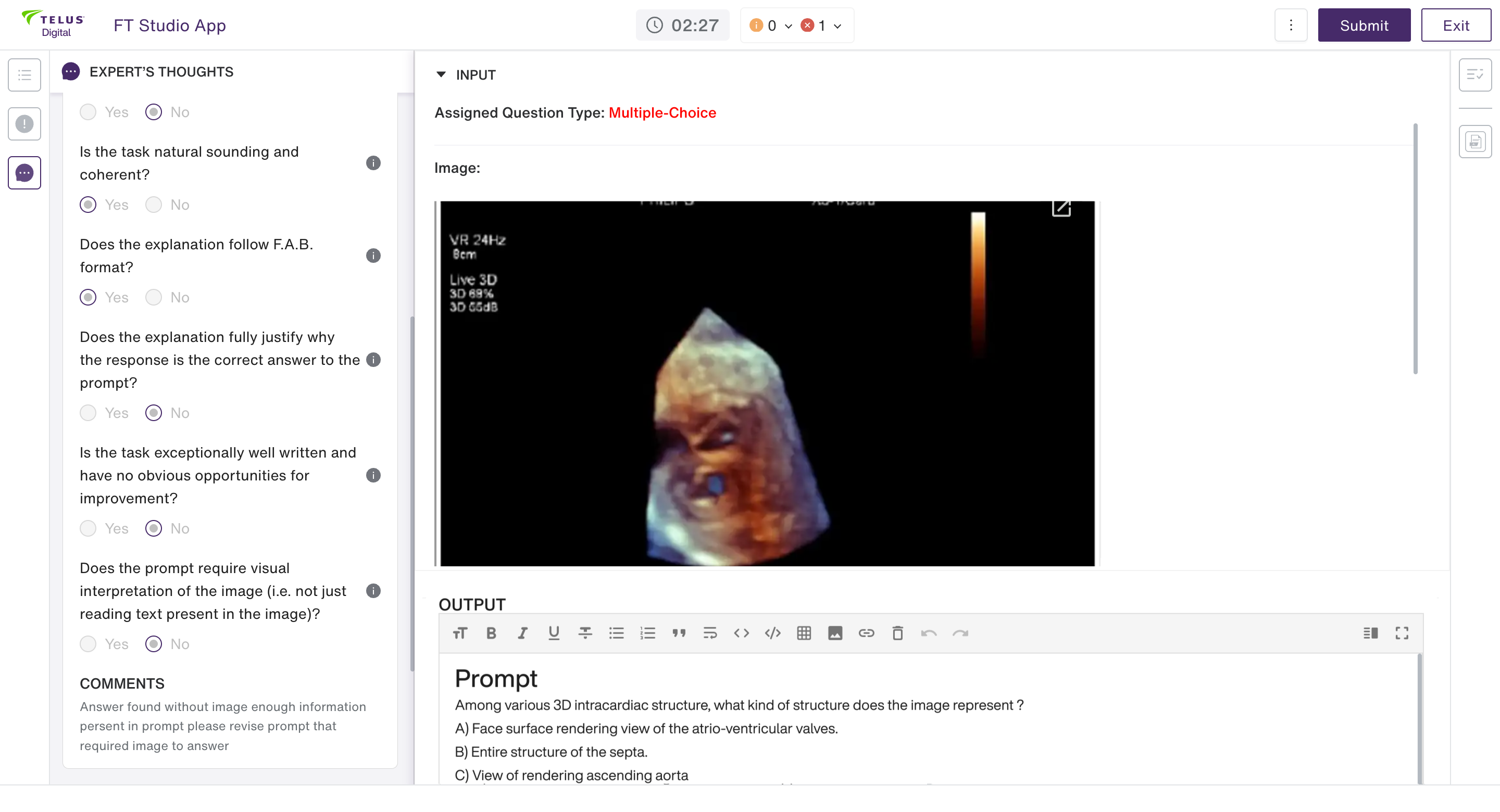

Live feedback: Data creators trigger on-demand validation via "Get Live Feedback".

Iteration on feedback: Creators receive structured feedback within seconds for immediate improvement.

This approach shifts human expertise from large-scale manual validation to exception handling and rubric improvement, freeing experts to focus on setting higher quality standards. While the QC agent provides powerful automation, we maintain a human-in-the-loop approach in which quality control experts review tasks before finalization, so that AI-driven scale and focused expert oversight together deliver superior data quality.

Strategic impact and key benefits

The QC agent framework has radically transformed our approach to frontier datasets. Key benefits include:

- Accelerated data velocity: Minimized review cycles lead to faster production of high-quality, complex data.

- Enhanced quality and consistency: Automated, rubric-based checks ensure uniform and high standards across entire datasets.

- Cost efficiency: Reliance on large pools of human reviewers for the initial, time-consuming stages of QC is reduced.

- Expert empowerment: Through instant feedback, QC agents act like a co-pilot and help our experts catch mistakes early, refine their reasoning and avoid errors. Because experts work toward the best quality from the outset, the work submitted to human reviewers is of higher quality.

- Reduced error propagation: Instant feedback reduces the iteration loop from hours or days to seconds, preventing the propagation of user errors.

- High-throughput, scalable QA: Consistent quality assurance across massive datasets is achieved without a proportional increase in human resources.

In one project, tasks processed by the QC agent achieved pass rates of 95% and 85% against stringent client submission criteria in two separate batches. Furthermore, the agent drove a ~7% increase in final delivery throughput, effectively converting a pipeline bottleneck into an accelerator.

Compounding quality benefits on Fine-Tune Studio

The Fine-Tune Studio platform allows for many configurable QC touchpoints. Workflows can be constructed with multiple, modular QC plugins (e.g., spell/grammar checks, plagiarism detection, AI-detection in response generation). The QC agent compounds these touchpoints with an agentic feedback loop to further reduce errors and increase batch quality percentages.
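One way to picture modular QC touchpoints is as a list of small checks that run in sequence and each report at most one issue. The sketch below assumes a simple plugin signature and uses two trivial stand-in checks. It does not represent the Fine-Tune Studio plugin API, and real spell, plagiarism or AI-detection plugins would be far more involved.

```python
from typing import Callable, List, Optional

# Hypothetical plugin signature: returns an issue message, or None if the check passes.
Plugin = Callable[[str], Optional[str]]


def max_length_check(limit: int) -> Plugin:
    """Build a plugin that flags text longer than `limit` characters."""
    def check(text: str) -> Optional[str]:
        if len(text) > limit:
            return f"Text exceeds {limit} characters."
        return None
    return check


def repeated_word_check(text: str) -> Optional[str]:
    """Flag immediately repeated words such as 'the the' (a stand-in for a grammar check)."""
    words = text.lower().split()
    for previous, current in zip(words, words[1:]):
        if previous == current:
            return f"Repeated word: {current!r}."
    return None


def run_plugins(text: str, plugins: List[Plugin]) -> List[str]:
    """Run every plugin in order and collect the issues they report."""
    issues = []
    for plugin in plugins:
        issue = plugin(text)
        if issue is not None:
            issues.append(issue)
    return issues


if __name__ == "__main__":
    workflow = [max_length_check(40), repeated_word_check]
    print(run_plugins("The proof is is valid.", workflow))
    print(run_plugins("The proof is valid.", workflow))
```

Because each check shares the same signature, a workflow can add, remove or reorder touchpoints without changing the runner.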

With our platform, model developers have complete control over the human-in-the-loop process. You can customize the review workflow, including the number of review stages and the specific metrics to evaluate against.

Here's how you can tailor the process:

- QC agent only: Use our QC plugins and LLM-as-a-jury agent to automate the review process entirely.

- In-house SMEs: Leverage our TELUS Digital subject matter experts for review.

- Internal teams: Conduct reviews using your own internal teams.

- Hybrid approach: Use any combination of the above options.

Once the QC system is deployed on our platform, it becomes a set-and-forget process. As new data streams in, it is labeled and reviewed by QC agents. If it meets your predefined criteria, it's routed to the appropriate subject matter experts for review, ensuring that only high-quality, vetted data reaches your model training.

Advance your complex training data needs with programmatic quality

The QC agent represents a fundamental shift from post-mortem quality control to real-time quality assurance, allowing us to scale the production of frontier datasets without compromising on quality or cost-effectiveness. By automating complex quality control, we deliver data quality at scale, at speed and at lower cost.

Contact us to explore how our QC agent can be integrated into your AI development workflow. Let's build the future of AI with quality at its core.