Agentic AI: Evolution and evaluation from cognitive foundations

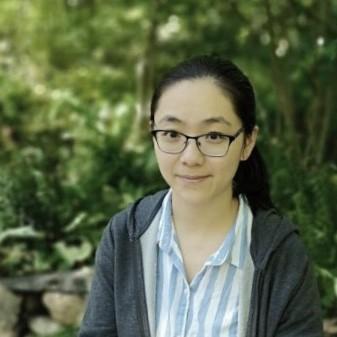

Lu Lu

AI Solution Architect

Agentic AI models — AI agents that can autonomously plan and act — are emerging as powerful tools for problem solving. However, assessing their capabilities and limitations requires evaluation methods that extend beyond those designed for conventional models. This article outlines a comprehensive conceptual approach to evaluating agentic AI systems, covering comparisons with conventional models, current assessment challenges, evaluation approaches that decompose an agent's capabilities into specific sub-areas and emerging future directions. The goal is to help agentic AI practitioners systematically evaluate these models and assess their readiness for real-world applications.

Study the science of art. Study the art of science. Develop your senses — especially learn how to see. Realize that everything connects to everything else.

-Leonardo da Vinci

The current surge in agentic AI builds upon a long-standing concept. As defined by Russell and Norvig (2000), an agent is “anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators.” However, integrating large language models (LLMs) into agents introduces capabilities that go far beyond earlier AI paradigms. Today’s agentic boom is largely driven by the fact that LLMs can generate reasoning tokens that support planning, reflection and memory, and that make an agent’s behavior, which used to be a black box, at least partially diagnosable. Because LLMs can internally simulate reasoning steps, they enable a new type of action: cognitive action, in which reasoning and reasoning about reasoning (a.k.a. metareasoning) become part of the agent’s behavior.

This extends the classical view of agents in a fundamental way: the environment that an agent perceives and acts upon is no longer only external. Perception and action can also happen internally through simulated reasoning, where the agent generates, evaluates and refines thoughts before acting in the outside world. In this expanded view, the agent not only reacts to the world but thinks within it. These internal reasoning processes can directly inform and improve the agent’s external actions. As a result, what the agent can perceive and do is vastly expanded. By leveraging external tools (e.g., APIs, databases), LLM agents can interact with other systems, and through internal reasoning, they can dynamically plan, self-correct and generalize across tasks.

This combination of internal cognition and external action represents a major shift toward general intelligence, a concept first introduced by Spearman (1904). It is broadly defined as “the capacity to reason, plan, solve problems, think abstractly, comprehend complex ideas and learn both quickly and from experience” (Arvey et al. 1994). Spearman’s notion of a single underlying “g factor” was later refined into a more detailed, hierarchical framework through the Cattell–Horn–Carroll (CHC) theory.

Figure 1. Carroll's three-stratum theory of cognitive abilities

In this model (Figure 1, adapted from Carroll 1993), general intelligence sits at the top layer (Stratum III), representing the broad mental capability that governs more specialized domains (Stratum II), which are further broken down into measurable skills (Stratum I). This multi-level structure of human intelligence maps closely onto the functional capabilities of LLM-powered agentic AI models. For instance:

- Fluid intelligence aligns well with the agent’s capability to reason over novel situations, adapt to unseen tasks or handle unexpected errors;

- Crystallized intelligence mirrors the agent’s pretrained or retrieved knowledge;

- General memory and learning corresponds to the agent’s in-context learning, session-based memory or external knowledge corpus;

- Broad visual and auditory perception can be closely related to the agent’s multimodal capabilities;

- Broad retrieval ability can be associated with the agent’s ability to retrieve information and access tools;

- Broad cognitive speediness and processing speed tie to the agent’s response time, attention control, planning efficiency and task parallelism.

This layered understanding of intelligence provides a valuable lens for evaluating LLM-powered agentic AI models. Building on the CHC framework and its mapping to agent capabilities, we can model LLM-powered agents in an end-to-end structure. Figure 2 presents a conceptual decomposition of an LLM-powered agent’s cognitive pipeline. It shows how core capabilities interrelate and how the agent perceives its environment, processes that information and ultimately acts upon it within a continuous feedback loop.

Figure 2. A conceptual model of agentic AI capabilities

At the base of the model, perception allows an agent to interpret signals from its environment, including various forms of multimodal input. This is supported by long-term memory, which enables the agent to access and retrieve stored knowledge. Building on this foundation, agents perform reasoning and use short-term memory, including session history, to synthesize information and execute intermediate reasoning steps. Planning and tool use emerge as higher-level functions, enabling the agent to sequence actions and extend its capabilities through external resources. Decision making then integrates all of these preceding capabilities by evaluating multiple potential trajectories and selecting the optimal path. The chosen path is executed through actions, which in turn affect the environment in terms of safety, user experience and more.

Taken together, evaluating agentic AI is essentially assessing how well the model performs across these core dimensions of synthetic cognitive intelligence, how these components are orchestrated to execute an action and what impact the resulting actions have in real-world contexts.

Challenges in evaluating LLM-powered agents

With access to tools and simulated cognitive functions, LLM-powered agents are beginning to operate in real-world settings, interact with real users and address real needs. This shift significantly increases their potential impact.

Ambiguity in language-based agents

Unlike traditional software systems with well-defined inputs, these agents operate through natural language, which is inherently ambiguous, context-dependent and diverse. This introduces challenges in both input interpretation and task specification, as instructions may be fuzzy, under-specified, open to multiple interpretations or a poor reflection of the user’s actual intent. For example, an analysis of the WebArena benchmark (Zhou et al. 2023) revealed a semantic error: the LLM-powered agent interpreted the instruction “assign the issue to myself” by literal string matching and assigned the issue to the string “myself”, rather than to the person giving the instruction (Xu 2024).
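To make the failure concrete, the sketch below contrasts a literal reading of the instruction with one that resolves self-referring words to the requesting user before building the call. The `assign_issue` call shape, the `SELF_REFERENCES` word list and the user names are hypothetical; WebArena’s actual environment and API are not modeled here.

```python
from dataclasses import dataclass

# Hypothetical words that refer to the person giving the instruction.
SELF_REFERENCES = {"me", "myself", "i"}


@dataclass
class ToolCall:
    name: str
    args: dict


def literal_assignee(instruction_target: str, current_user: str) -> ToolCall:
    # Failure mode from the example: current_user is ignored and the
    # phrase is copied verbatim as if it were a username.
    return ToolCall("assign_issue", {"assignee": instruction_target})


def resolved_assignee(instruction_target: str, current_user: str) -> ToolCall:
    # Resolve self-references to the authenticated user before calling the API.
    target = instruction_target.strip().lower()
    assignee = current_user if target in SELF_REFERENCES else instruction_target
    return ToolCall("assign_issue", {"assignee": assignee})


def referent_correct(call: ToolCall, expected_assignee: str) -> bool:
    # Evaluator-side check: compare the resulting assignee with the intended
    # person, not with the surface string in the instruction.
    return call.args.get("assignee") == expected_assignee


user = "alice"  # hypothetical logged-in user
print(referent_correct(literal_assignee("myself", user), "alice"))   # False
print(referent_correct(resolved_assignee("myself", user), "alice"))  # True
```

The evaluator checks the outcome (who ends up assigned) rather than whether the call was well-formed, since the literal call is syntactically valid and would pass a purely structural check.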

Contextualized long-horizon tasks

The flexibility of natural language instructions, combined with the growing autonomy of agentic capabilities, is rapidly expanding agents’ task coverage to more open-ended and long-horizon scenarios. These long-horizon tasks require agents to execute sequences of actions and carry forward context (prior instructions, user preferences or environmental details) to make appropriate decisions at later stages. For example, some studies (such as Chen et al. 2025) have explored using a research agent to generate ideas, write proposals, carry out experiments and write papers in machine learning research. This shows that agents are now attempting research, an area typically reserved for human experts. Emerging applications like this reflect a shift away from narrowly defined, single-step tasks toward context-sensitive, long-horizon scenarios.

Responsible use of agents

The increased capability introduces new risks, which stem from the agent’s downstream actions and their implications (as illustrated conceptually in Figure 2). Agents can now execute irreversible actions in sensitive areas, such as financial transactions, processing private data or making autonomous decisions on behalf of stakeholders. Compared with standalone LLMs, LLM-powered agents are more likely to engage in harmful behaviors and are better at creating content that is harder to detect (Tian et al. 2024, Yang et al. 2024). The integration of these tools into commercial products, such as Microsoft Copilot and GitHub Copilot, underscores the growing importance of addressing concerns around faithfulness, safety, reliability and ethical use of agentic tools.

Complexity in measuring success

The definition of success is shifting. It is no longer tied to a single, fixed outcome; many tasks allow multiple valid solutions, and determining the best outcome often requires human validation, making it difficult to rely solely on automated evaluation. While automatic evaluation methods, including LLM-as-a-judge, are cost-effective and efficient, studies show that they can inflate leaderboard performance (e.g., Yu et al. 2025 revisit the SWE-bench leaderboard of Jimenez et al. 2023). For instance, Tau-Bench deliberately includes unsolvable tasks, yet an agent that does not attempt them may still be recorded as successful. Similarly, substring matching is an unreliable measure of success, as agents can exploit it by outputting a large set of candidate strings to pass the check. These limitations highlight the importance of human-in-the-loop evaluation and validation. By incorporating human oversight and feedback at critical points, agents can be aligned more closely with complex human values and intentions, particularly in high-stakes or ambiguous situations and in settings where subtle changes in social signals can significantly change social meaning.
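The substring-matching weakness is easy to reproduce. In this sketch, `substring_check` and `exact_check` are illustrative scorers, not the grading code of any named benchmark, and the agent answers are made up.

```python
def substring_check(answer: str, expected: str) -> bool:
    # Lenient scorer: passes if the expected string appears anywhere.
    return expected.lower() in answer.lower()


def _normalize(text: str) -> str:
    # Lowercase and collapse whitespace so formatting differences don't matter.
    return " ".join(text.lower().split())


def exact_check(answer: str, expected: str) -> bool:
    # Stricter scorer: requires the normalized answer to equal the target.
    return _normalize(answer) == _normalize(expected)


expected = "Pittsburgh"
honest_answer = "Pittsburgh"
# An agent that "hedges" by listing many candidates at once.
shotgun_answer = ", ".join(["Boston", "Chicago", "Pittsburgh", "Denver", "Seattle"])

for label, answer in [("honest", honest_answer), ("shotgun", shotgun_answer)]:
    print(label, substring_check(answer, expected), exact_check(answer, expected))
# honest True True
# shotgun True False
```

Exact matching closes this particular loophole but rejects legitimate paraphrases, which is one reason the text argues for human validation where many answers are valid.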

As these agentic use cases enter enterprise and industrial domains, the need for domain experts to be involved in evaluation and oversight is becoming increasingly apparent. For instance, LLM-powered agents can be orchestrated to simulate real-world clinical diagnostic reasoning (Nori et al. 2025). Such an AI-assisted diagnostic tool can improve diagnostic precision and lower costs for primary care physicians, whose medical expertise is vital not only for using the tool but also for its ongoing evaluation and oversight.

The challenges in evaluating LLM-powered agents highlight the need for effective and diverse evaluation approaches. Developing these methods will be key to unlocking agents’ full potential and ensuring safe and reliable deployment.

Approaches in agentic evaluation

The unique challenges of evaluating LLM-powered agents call for more robust assessment methods. To tackle them, we begin by refining the fundamental approach to agent evaluation.

Evaluation environment

A crucial starting point is selecting or creating the right evaluation environment, as the environment provides the foundational context for assessing an agent’s performance. Unlike traditional rule-based systems, LLM-powered agents require evaluation environments that are not only interactive and diverse, but also sufficiently controllable to simulate real-world conditions at scale. Depending on the level of control, these environments can be categorized into three main types:

- Self-hosted simulated sandbox: A fully controlled environment that closely mirrors real-world situations while eliminating the risk of irreversible actions. This setup is particularly valuable during the early stages of development, as it ensures safety and reproducibility.

- Client-specific replicated environments: A mirrored copy of a client’s operational setup, which typically uses synthetic user accounts and data. This allows agents to be tested under realistic conditions without impacting live production systems.

- Deployed environments with live protocols: This involves directly evaluating the production system via the Model Context Protocol (MCP) and other communication protocols. While it enables end-to-end validation, it requires careful safeguards to prevent unintended consequences.
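One way to keep the same agent under test across all three settings is to route every side-effecting call through an interface whose implementation changes with the environment. This is a minimal sketch assuming a hypothetical `PaymentBackend` protocol with a single `refund` operation; the class names, the synthetic-ID rule and the approval hook are illustrative, and no real MCP client is shown.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol


class PaymentBackend(Protocol):
    """Hypothetical interface that the agent's refund tool is bound to."""

    def refund(self, order_id: str, amount: float) -> str: ...


@dataclass
class SandboxBackend:
    """Self-hosted sandbox: records intended actions, touches nothing real."""

    log: list = field(default_factory=list)

    def refund(self, order_id: str, amount: float) -> str:
        self.log.append(("refund", order_id, amount))
        return f"simulated refund {order_id}"


@dataclass
class ReplicaBackend:
    """Client replica: only synthetic accounts and orders are allowed."""

    synthetic_orders: set

    def refund(self, order_id: str, amount: float) -> str:
        if order_id not in self.synthetic_orders:
            raise PermissionError(f"{order_id} is not synthetic test data")
        return f"replica refund {order_id}"


@dataclass
class LiveBackend:
    """Live deployment: every irreversible call needs an approval hook."""

    approve: Callable[[str, float], bool]

    def refund(self, order_id: str, amount: float) -> str:
        if not self.approve(order_id, amount):
            raise PermissionError("refund not approved")
        # Placeholder: a real integration (e.g., a protocol-based tool call) goes here.
        return f"live refund {order_id}"


def run_agent_step(backend: PaymentBackend, order_id: str, amount: float) -> str:
    # The agent-side logic is identical in all three environments.
    return backend.refund(order_id, amount)
```

Because only the backend changes, results from the sandbox, replica and live tiers stay comparable, and each tier can enforce its own safeguard.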

Evaluation task

While these environments establish the conditions for evaluation, the focus of what is being measured has also shifted alongside advances in the capabilities of LLM-powered agents. Initially, evaluation centered on capabilities like GUI navigation or browser-based tasks. Today, the focus is on assessing API interactions in long-horizon tasks that simulate real-world problems within specific domains. This reflects the operational reality of modern agentic systems. At a high level, current evaluation frameworks examine whether agents can generate valid API calls with the correct arguments, execute those calls successfully and appropriately integrate the resulting outputs into their planning and tool-use workflows.

This marks a departure from earlier benchmarks that focused on stateless, short-horizon tasks in simplified environments (e.g., WebShop 2022, Mind2Web 2023). Modern benchmarks now incorporate rich content, interactive settings and long-horizon tasks (e.g., WebArena 2023, AppWorld 2024, Tau-Bench 2024, CVE-Bench 2025). These tasks challenge agents to do more than just determine when an API call is necessary; they must also identify the correct API to address a user’s intent, interpret and apply API documentation correctly and resolve ambiguous or under-specified user instructions, such as unclear or conflicting goals.

Evaluation metrics for LLM-powered agents

Overall performance metrics

As with many other machine learning models, robustness and consistency are core to any agent’s development. Robustness evaluates the agent’s ability to generalize across unseen domains, tasks and applications; consistent failure in a given domain may indicate a need for a domain expert to identify gaps in the agent’s reasoning or tooling. Consistency ensures that evaluation outcomes remain stable across repeated runs and over time, rather than fluctuating unpredictably.
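Consistency can be quantified by running each task several times. The sketch below uses the pass^k estimator introduced with Tau-Bench (Yao et al. 2024): with n trials of a task of which c succeed, the probability that k independently sampled trials all succeed is estimated as C(c, k) / C(n, k). Grouping scores by domain to expose robustness gaps is an illustrative choice, and the trial outcomes are made up.

```python
from collections import defaultdict
from math import comb


def pass_hat_k(n: int, c: int, k: int) -> float:
    """Estimate that k i.i.d. trials of one task all succeed, from c of n successes."""
    if k > n:
        raise ValueError("k cannot exceed the number of trials n")
    return comb(c, k) / comb(n, k)  # comb(c, k) is 0 when c < k


def consistency_by_domain(results: dict, k: int) -> dict:
    """results maps (domain, task_id) -> list of bool trial outcomes."""
    per_domain = defaultdict(list)
    for (domain, _task_id), trials in results.items():
        per_domain[domain].append(pass_hat_k(len(trials), sum(trials), k))
    # Average the per-task estimates within each domain.
    return {d: sum(v) / len(v) for d, v in per_domain.items()}


results = {
    ("retail", "t1"): [True, True, True, True],
    ("retail", "t2"): [True, False, True, True],
    ("airline", "t3"): [True, False, False, True],
}
print(consistency_by_domain(results, k=1))  # {'retail': 0.875, 'airline': 0.5}
print(consistency_by_domain(results, k=4))  # {'retail': 0.5, 'airline': 0.0}
```

A domain whose score collapses as k grows is one where the agent succeeds only intermittently, while a domain that is already low at k = 1 points to the kind of robustness gap the text suggests bringing a domain expert in to diagnose.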

Current evaluation benchmarks, such as Tau-Bench, typically emphasize whether the final goal is achieved. However, evaluating intermediate steps is equally important. It helps assess whether the agent selects efficient step-wise actions that enhance user experience (step-wise optimality), and it can detect high-stakes errors that could significantly affect user trust and satisfaction.
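Intermediate factors can be scored from a logged trajectory. In this sketch, step-wise optimality is approximated as the ratio of a reference (shortest known) step count to the steps actually taken, and any step whose action is in a hypothetical `HIGH_STAKES` set and was not confirmed by the user is flagged. Both the metric definition and the action names are assumptions for illustration, not Tau-Bench’s scoring.

```python
from dataclasses import dataclass

HIGH_STAKES = {"issue_refund", "cancel_order", "delete_account"}  # hypothetical


@dataclass
class Step:
    action: str
    confirmed_by_user: bool = False


def step_efficiency(trajectory: list[Step], reference_steps: int) -> float:
    # 1.0 means the agent matched the shortest known path; lower means detours.
    return min(1.0, reference_steps / max(len(trajectory), 1))


def high_stakes_violations(trajectory: list[Step]) -> list[int]:
    # Indices of irreversible actions executed without explicit confirmation.
    return [i for i, s in enumerate(trajectory)
            if s.action in HIGH_STAKES and not s.confirmed_by_user]


traj = [Step("lookup_order"), Step("lookup_order"), Step("get_policy"),
        Step("cancel_order")]
print(step_efficiency(traj, reference_steps=2))  # 0.5
print(high_stakes_violations(traj))              # [3]
```

If canceling the order was the user’s goal, an end-state check alone would score this trajectory as a full success, hiding both the redundant lookup and the unconfirmed cancellation.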

Component-based metrics

To systematically evaluate the intelligence of LLM-powered agent systems, we can refer to the conceptual model of agent capabilities in Figure 2 and anchor evaluation criteria to its components of general intelligence (see Figure 3). Each component has distinct evaluation targets and error modes, together providing a comprehensive, hierarchical understanding of agent performance.

Figure 3. Evaluation metrics mapped to the key components of an agent’s general intelligence

Contextual reasoning

Early evaluation focuses on reasoning capabilities: how accurately the agent interprets the user’s intended goal, whether it self-reflects to check its accuracy, and how well it perceives and integrates long- and short-term memory. This includes:

- Whether the agent correctly captures user requests that may contain multiple sub-requests;

- Whether it can detect and correct its own reasoning errors and

- Whether it effectively retrieves and integrates information from short-term memory (e.g., prior dialogue context), perception inputs (e.g., sensor data) and long-term memory sources (e.g., databases, corpora, documentation).

Failures at this stage often have cascading effects on later components.
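The first check can be scored against annotations. The sketch below assumes each test case has a human-annotated set of sub-requests and that the agent’s parsed intent has already been normalized into comparable labels; the labels and the example request are hypothetical.

```python
def subrequest_scores(predicted: set[str], gold: set[str]) -> dict[str, float]:
    # Recall: share of the user's sub-requests that the agent captured.
    # Precision: share of the agent's sub-requests that the user actually asked for.
    # Empty sets are treated as vacuously perfect.
    hit = len(predicted & gold)
    recall = hit / len(gold) if gold else 1.0
    precision = hit / len(predicted) if predicted else 1.0
    return {"recall": recall, "precision": precision}


# "Change my flight to Friday and add a checked bag, but keep my seat."
gold = {"change_flight_date", "add_checked_bag", "preserve_seat"}
predicted = {"change_flight_date", "add_checked_bag"}
print(subrequest_scores(predicted, gold))
```

Here recall is about 0.67 because the agent dropped the "keep my seat" constraint. That omission is the kind of early failure that cascades: a later rebooking step could silently reassign the seat even if every tool call succeeds.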

Plan and tool quality

At the planning stage, the agent is tasked with generating a sequence of actions to achieve the end goal. Evaluation here includes:

- The validity of the plan (how many generated plans are logically and operationally valid);

- Planning efficiency (how many trials the agent requires before producing a valid plan) and

- Plan diversity (does the agent propose multiple viable alternatives, enabling better selection or fallback strategies).

These aspects assess LLM-powered agents' creativity and efficiency in problem-solving.
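The three planning measures can be computed from repeated planning attempts. This sketch assumes each plan is a tuple of step names, uses a toy precondition table as a stand-in for a real plan validator, and approximates diversity as the number of distinct valid plans; the domain, action names and attempts are hypothetical.

```python
# Hypothetical toy domain: action -> actions that must already have happened.
PRECONDITIONS = {
    "search": set(),
    "add_to_cart": {"search"},
    "apply_coupon": {"add_to_cart"},
    "checkout": {"add_to_cart"},
}
GOAL = "checkout"


def is_valid(plan: tuple[str, ...]) -> bool:
    done: set[str] = set()
    for step in plan:
        if step not in PRECONDITIONS or not PRECONDITIONS[step] <= done:
            return False  # unknown action or unmet precondition
        done.add(step)
    return GOAL in done


def planning_metrics(attempts: list[tuple[str, ...]]) -> dict:
    valid_flags = [is_valid(p) for p in attempts]
    first_valid = next((i + 1 for i, ok in enumerate(valid_flags) if ok), None)
    return {
        "validity_rate": sum(valid_flags) / len(attempts),
        "trials_to_first_valid": first_valid,  # None if no valid plan was produced
        "distinct_valid_plans": len({p for p, ok in zip(attempts, valid_flags) if ok}),
    }


attempts = [
    ("add_to_cart", "checkout"),                             # skips search: invalid
    ("search", "add_to_cart", "checkout"),                   # valid
    ("search", "add_to_cart", "apply_coupon", "checkout"),   # valid alternative
    ("search", "add_to_cart", "checkout"),                   # duplicate of attempt 2
]
print(planning_metrics(attempts))
# {'validity_rate': 0.75, 'trials_to_first_valid': 2, 'distinct_valid_plans': 2}
```

Counting distinct valid plans rather than valid attempts keeps repeated copies of one plan from looking like genuine alternatives for fallback.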

Reasoning and planning are critical for enabling an agent to determine not only when to make an API call, but also why it should be made. Since API calls are a primary form of tool use, evaluating their execution provides helpful insight into the agent’s reliability and its ability to integrate with external systems. This evaluation considers whether the agent selects the appropriate tool for the intended subtask, whether the calls are made with valid arguments rather than incorrect, hallucinated or incomplete parameters and how often the agent attempts to invoke non-existent or incompatible functions. Taken together, these assessments help reveal weaknesses in the agent’s reasoning process and its capacity to plan and execute interactions with APIs effectively.
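The tool-use checks above can be computed over logged calls. The sketch assumes a hypothetical registry mapping each tool name to its parameters, with expected types and a required flag, plus a per-call annotation of which tool the subtask actually needed; it is a simplified stand-in for full JSON Schema validation.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical registry: tool name -> {parameter: (expected type, required?)}
REGISTRY = {
    "get_order": {"order_id": (str, True)},
    "refund_item": {"order_id": (str, True), "item_id": (str, True),
                    "reason": (str, False)},
}


@dataclass
class LoggedCall:
    name: str
    args: dict
    expected_tool: str  # annotated: which tool this subtask called for


def classify(call: LoggedCall) -> str:
    spec = REGISTRY.get(call.name)
    if spec is None:
        return "nonexistent_function"
    if call.name != call.expected_tool:
        return "wrong_tool"
    missing = [p for p, (_, required) in spec.items() if required and p not in call.args]
    unknown = [p for p in call.args if p not in spec]  # hallucinated parameters
    mistyped = [p for p, v in call.args.items()
                if p in spec and not isinstance(v, spec[p][0])]
    return "invalid_arguments" if (missing or unknown or mistyped) else "ok"


def tool_use_report(calls: list[LoggedCall]) -> dict[str, float]:
    # Share of logged calls falling into each outcome category.
    counts = Counter(classify(c) for c in calls)
    return {label: n / len(calls) for label, n in counts.items()}


calls = [
    LoggedCall("get_order", {"order_id": "A1"}, "get_order"),
    LoggedCall("refund_item", {"order_id": "A1"}, "refund_item"),      # missing item_id
    LoggedCall("refund_order", {"order_id": "A1"}, "refund_item"),     # not in registry
    LoggedCall("get_order", {"order_id": "A1", "priority": 1}, "get_order"),  # unknown param
]
print(tool_use_report(calls))
```

Keeping nonexistent functions, wrong tool choices and bad arguments as separate categories helps localize the weakness: the first two usually trace back to reasoning or planning, the last to how the agent reads API documentation.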

Goal trajectories

At the decision-making stage, the agent chooses which action to execute among several possible alternatives. This stage builds directly on reasoning and planning: if the agent has misunderstood the user’s intent, retrieved incorrect information or generated weak plans, those shortcomings will cascade into poor decisions. Evaluation therefore centers on whether the chosen trajectory is effective and optimal in reaching the goal. An optimal trajectory must also be cost-aware, which involves examining how many steps are required to complete the task and the associated computational expenses. Latency is another factor that greatly affects user experience, as certain actions may be disproportionately time-consuming and require optimization to maintain responsiveness. Finally, interruptibility is assessed by determining whether the agent’s action sequence can be adjusted by a human or an expert in the loop, and at what points such intervention is feasible. Together, these considerations directly affect user experience, particularly in time-sensitive or resource-constrained environments where fast, reliable and cost-effective trajectories are critical for users and stakeholders.
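Cost, latency and interruptibility can be folded into trajectory evaluation. In this sketch, each candidate trajectory carries per-step cost, latency and an `interruptible` flag marking where a human could safely step in; the selection rule (latency budget first, then cost, then step count) and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StepRecord:
    tool: str
    cost_usd: float
    latency_s: float
    interruptible: bool  # can a human pause or redirect before this step runs?


@dataclass
class Trajectory:
    name: str
    reaches_goal: bool
    steps: list[StepRecord]

    @property
    def cost(self) -> float:
        return sum(s.cost_usd for s in self.steps)

    @property
    def latency(self) -> float:
        # Assumes steps run sequentially; parallel stages would need max() per stage.
        return sum(s.latency_s for s in self.steps)

    def intervention_points(self) -> list[int]:
        return [i for i, s in enumerate(self.steps) if s.interruptible]


def pick_trajectory(candidates: list[Trajectory], max_latency_s: float) -> Optional[Trajectory]:
    # Keep goal-reaching trajectories within the latency budget, then prefer
    # the cheapest one and, on ties, the one with fewer steps.
    feasible = [t for t in candidates if t.reaches_goal and t.latency <= max_latency_s]
    return min(feasible, key=lambda t: (t.cost, len(t.steps)), default=None)


search = StepRecord("search_slots", 0.01, 2.0, True)
book = StepRecord("book_slot", 0.02, 1.0, True)
a = Trajectory("search_then_book", True, [search, book])
b = Trajectory("book_directly", True, [StepRecord("book_slot", 0.02, 1.0, False)])
c = Trajectory("exhaustive_search", True, [search] * 5 + [book])

best = pick_trajectory([a, b, c], max_latency_s=5.0)
print(best.name, best.intervention_points())  # book_directly []
```

The cheapest, fastest trajectory here offers no point where a human can intervene. Reporting intervention points alongside cost and latency keeps that trade-off visible instead of hiding it behind a single score.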

Execution and coordination

After making the decision, the agent translates its selected plan into concrete operations within the environment during the action execution stage. Whereas decision-making determines what action to take, execution focuses on how that action is carried out. Even when the agent has chosen the correct plan and identified the appropriate tools, errors may still arise if the execution is faulty. For instance, an agent may correctly plan to return all the items in an order but then process the return of only one item. Alternatively, an agent might fail to orchestrate multiple tools effectively: it might correctly plan to reschedule a delivery by first checking the customer’s availability in a calendar tool and then using a separate scheduling tool, but fail to perform the second step after receiving the customer’s information.
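Plan-execution mismatches like the partial return can be caught by diffing what the agent committed to against what the environment actually recorded. The sketch assumes both are available as lists of `(tool, argument)` pairs; the tool names and order contents are hypothetical.

```python
from collections import Counter


def execution_gaps(planned: list[tuple], executed: list[tuple]) -> dict:
    # Multiset difference, so repeated identical actions are counted correctly.
    plan_counts, exec_counts = Counter(planned), Counter(executed)
    return {
        "missing": sorted((plan_counts - exec_counts).elements()),
        "unplanned": sorted((exec_counts - plan_counts).elements()),
    }


# Partial return: the plan covers three items, but only one return was executed.
planned = [("return_item", "item-1"), ("return_item", "item-2"),
           ("return_item", "item-3")]
executed = [("return_item", "item-1")]
print(execution_gaps(planned, executed))
# {'missing': [('return_item', 'item-2'), ('return_item', 'item-3')], 'unplanned': []}

# Broken tool chain: the calendar was checked but scheduling never happened.
print(execution_gaps([("check_calendar", "cust-42"), ("schedule_delivery", "cust-42")],
                     [("check_calendar", "cust-42")]))
# {'missing': [('schedule_delivery', 'cust-42')], 'unplanned': []}
```

Comparing against the environment’s record rather than the agent’s own narration matters, because an agent can report a step as done without having executed it.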

Evaluation at this level therefore examines both the correctness of individual actions and the coordination across dependent tools. In practice, this often requires assessing functionality at two levels:

- Whether each tool performs as intended when called independently.

- Whether the tools are orchestrated coherently to achieve the broader task. As multi-agent systems become more prevalent, this orchestration extends beyond tool chaining to include collaboration among multiple agents.

Here, execution must be evaluated not only for accuracy but also for efficiency (for example, deciding whether subtasks are best carried out sequentially or in parallel). Strong execution validates upstream processes, while failures often reveal hidden weaknesses in earlier stages that were not apparent during evaluation in isolation.
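Whether subtasks should run sequentially or in parallel follows from their dependencies. The sketch uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) to group subtasks into stages: everything within one stage is independent and could run in parallel, while stages must run in order. The subtask names are hypothetical, loosely extending the delivery example above.

```python
from graphlib import TopologicalSorter


def execution_stages(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group subtasks into stages; subtasks within a stage are mutually independent."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        stages.append(ready)
        sorter.done(*ready)
    return stages


# Hypothetical subtasks: each maps to the set of subtasks it depends on.
subtasks = {
    "check_calendar": set(),
    "lookup_address": set(),
    "schedule_delivery": {"check_calendar", "lookup_address"},
    "notify_customer": {"schedule_delivery"},
}
for i, stage in enumerate(execution_stages(subtasks), start=1):
    print(i, stage)
# 1 ['check_calendar', 'lookup_address']
# 2 ['schedule_delivery']
# 3 ['notify_customer']
```

An evaluator can compare the agent’s call log against these stages: running the first stage serially wastes time, while calling `schedule_delivery` before both of its inputs have returned is an ordering error.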

Safety and ethics

Once executable actions extend beyond simulation and begin to affect real users and real environments, safety becomes vital. Execution errors that might appear minor in a sandbox can carry significant consequences when applied to production environments, real users or real-world systems. For example, an incorrect API call in a simulated environment may simply return an error message, but the same action in a live financial system could result in an unintended transaction or, in a healthcare setting, an incorrect record update. Commonly seen vulnerabilities include web browsing tools that expose the agent to unsafe or malicious content, automated code execution modules that may introduce security breaches and write operations that risk changing files or databases without sufficient supervision.

Evaluating safety therefore requires more than detecting functional correctness; it involves assessing risk awareness, mitigation strategies and human-in-the-loop safeguards. Key considerations include:

- Whether the agent can recognize safety concerns, especially those that involve high-stakes errors;

- Whether it requests confirmation, authentication or a privileged role before executing potentially irreversible actions and

- Whether robust fallback mechanisms exist in the event of tool failure or malicious input.
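The second and third checks can be exercised with a guard that sits between the agent and its tools. This is a minimal sketch, assuming a hypothetical `IRREVERSIBLE` set, a `confirm` callback standing in for user confirmation or privileged-role approval, and a fallback that escalates to a human rather than retrying blindly.

```python
from typing import Any, Callable

IRREVERSIBLE = {"transfer_funds", "delete_record", "update_patient_record"}  # hypothetical


def guarded_call(tool_name: str, tool_fn: Callable[..., Any], args: dict,
                 confirm: Callable[[str, dict], bool]) -> dict:
    # Gate irreversible actions behind explicit confirmation or approval.
    if tool_name in IRREVERSIBLE and not confirm(tool_name, args):
        return {"status": "blocked", "reason": "confirmation not granted"}
    try:
        return {"status": "ok", "result": tool_fn(**args)}
    except Exception as exc:  # tool failure or malformed/malicious input
        # Fallback: stop and escalate instead of improvising another write.
        return {"status": "escalated", "reason": f"{type(exc).__name__}: {exc}"}


def transfer_funds(amount: float, to: str) -> str:
    # Hypothetical tool used only to exercise the guard.
    if amount <= 0:
        raise ValueError("amount must be positive")
    return f"sent {amount} to {to}"


def always_deny(tool_name: str, args: dict) -> bool:
    return False  # stand-in for a reviewer who rejects the action


def always_approve(tool_name: str, args: dict) -> bool:
    return True  # stand-in for a reviewer who approves the action
```

A safety evaluation could then verify that every irreversible call in a trajectory passed through the gate, and that injected tool failures end in an escalation rather than an unreviewed retry.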

Seen in the broader evaluation chain, safety represents the culmination of the previously discussed components. A lapse at any earlier stage (e.g., misinterpreted intent, flawed planning, suboptimal decision-making or faulty execution) can compound into unsafe outcomes. Traditional systematic stress-testing of LLMs has primarily focused on language-based outputs or on malicious audio and video inputs and outputs in multimodal models. The risks addressed by this testing typically include misinformation, bias and hate speech, privacy breaches and unprofessional advice. However, the emerging paradigm of LLM-powered agents introduces new vulnerabilities related to tool use, requiring novel approaches to red teaming (e.g., CVE-Bench). For instance, evaluators need to assess whether an LLM-powered agent has effective defensive mechanisms to refuse and block the execution of ill-intentioned function calls. Red teaming across function calls and domains is therefore a critical step in developing trustworthy LLM-powered agent systems for real-world deployment.
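Results of a red-teaming pass over function calls can be summarized per domain, so that a weak area is not averaged away. The sketch assumes each adversarial case has already been run and labeled with whether the agent refused; pairing harmful cases with benign controls to measure over-refusal is an illustrative addition, and the cases are made up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RedTeamCase:
    domain: str          # e.g., "finance", "web_browsing", "code_exec"
    harmful: bool        # True for ill-intentioned requests, False for benign controls
    agent_refused: bool  # label from running the case


def refusal_report(cases: list[RedTeamCase]) -> dict[str, dict[str, float]]:
    by_domain = defaultdict(lambda: {"harmful": [], "benign": []})
    for c in cases:
        by_domain[c.domain]["harmful" if c.harmful else "benign"].append(c.agent_refused)
    report = {}
    for domain, groups in by_domain.items():
        h, b = groups["harmful"], groups["benign"]
        report[domain] = {
            # Share of harmful calls blocked (higher is better).
            "harmful_refusal_rate": sum(h) / len(h) if h else float("nan"),
            # Share of benign calls wrongly refused (lower is better).
            "over_refusal_rate": sum(b) / len(b) if b else float("nan"),
        }
    return report


cases = [
    RedTeamCase("finance", True, True),
    RedTeamCase("finance", True, False),
    RedTeamCase("finance", False, False),
    RedTeamCase("code_exec", True, True),
    RedTeamCase("code_exec", False, True),
]
print(refusal_report(cases))
```

In this toy data the finance domain blocks only half of the harmful calls, while the code-execution domain blocks everything, including a benign request, so neither would look right if only an overall refusal rate were reported.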

By decomposing evaluation across the hierarchical components of an agent’s general intelligence, we can more precisely identify strengths and weaknesses in LLM-powered agents. Each stage builds on the previous one:

- Accurate reasoning supports effective planning;

- Sound planning enables optimal decisions;

- Robust decisions translate into reliable execution and

- Safe execution ultimately determines the system’s trustworthiness in real-world deployment and user adoption.

This component-level hierarchical framework not only enhances diagnostic power, but also supports more targeted improvement cycles in deployments.
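Because each stage builds on the previous one, a failed episode can be attributed to the earliest stage that failed, which keeps downstream symptoms from being blamed for upstream causes. The stage names follow the components above; the per-episode pass/fail judgments are assumed to come from component-level checks, and the episodes are made up.

```python
from collections import Counter
from typing import Optional

STAGES = ["reasoning", "planning", "decision", "execution", "safety"]


def root_cause(stage_results: dict[str, bool]) -> Optional[str]:
    # Return the first stage, in pipeline order, that failed; None if all passed.
    # Stages missing from the record are treated as passed.
    for stage in STAGES:
        if not stage_results.get(stage, True):
            return stage
    return None


episodes = [
    {"reasoning": True, "planning": True, "decision": True, "execution": False, "safety": False},
    {"reasoning": False, "planning": False, "decision": False, "execution": False, "safety": True},
    {"reasoning": True, "planning": False, "decision": True, "execution": True, "safety": True},
    {stage: True for stage in STAGES},
]
print(Counter(root_cause(e) for e in episodes))
```

This earliest-failure rule is a heuristic: stages can also fail independently (a safety lapse with no upstream error, for example), so attributions are best reviewed alongside the raw per-stage results.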

Future directions

Evaluating agents across these components provides a structured lens on current capabilities and limitations. Yet understanding where agents stand today naturally raises the question: what comes next?

While LLMs and LLM-powered agents frequently set new records on benchmark leaderboards, their impact on everyday workflows remains limited. This points to a crucial gap: what outcomes do we ultimately want these agents to deliver? Moving forward, agent development and evaluation should extend beyond artificial benchmarks toward environments that better reflect real-world contexts. Such a shift is essential to ensure that advances in agent performance translate into tangible, practical value.

Proactive agents

Current LLM-powered agents remain largely reactive, responding to explicit user instructions or environmental inputs rather than anticipating needs. A key direction for advancement is the development of proactive agents that are capable of initiating helpful actions, predicting user requirements and adjusting strategies before being prompted. For example, an agent managing enterprise workflows could proactively suggest process optimizations based on historical usage patterns rather than waiting for a direct query.

Human-agent and agent-agent collaboration

While task success rate remains a critical benchmark, future evaluations also need to capture collaborative dimensions. Effective agents should not only succeed in completing tasks independently, but also demonstrate the ability to work smoothly alongside humans and other agents. This requires assessing how well an agent negotiates roles, requests clarification or authentication when needed, and adapts to cooperative workflows rather than optimizing solely for independent task completion.

Communication- and cognition-aware agents

Established theories in communication, pragmatics and cognition provide valuable frameworks for improving and evaluating human-agent dialogue. Communication theory, such as Clark and Brennan’s grounding model (1991), pragmatics, including Grice’s Cooperative Principle and Maxims (1975), and cognitive perspectives, like Theory of Mind (Premack and Premack, 1995), all offer insight into how agents should interact with users. Early findings show that current agents often overcompensate in one of two directions: they are either overly collaborative, agreeing too readily in ways that reduce efficiency, or overly autonomous, taking unconfirmed actions that undermine trust. A central challenge is determining the appropriate level of information flow between agent and user, and training models to recognize both contextual cues and the norms that shape communication. Developing balanced strategies along these dimensions will be critical to achieving interactions that are not only effective but also trustworthy in real-world applications.

Collective intelligence in agent-agent interaction

The emergence of multi-agent systems introduces a new layer of evaluation. Beyond assessing individual task performance, it becomes necessary to evaluate the agents’ collective intelligence, i.e., their ability to combine their reasoning to achieve outcomes that a single agent could not. This includes assessing how well they self-organize without central oversight (decentralized control) and whether they can collaborate productively in parallel. It is also important to determine whether dependencies between agents create inefficiencies and bottlenecks that hinder the orchestration and performance of the overall system.

Advancing evaluation across these axes will enable the design of agentic systems that are not only accurate and efficient, but also proactive, collaborative, socially attuned and better equipped to meet the demands, diversity and complexities of real-world environments.

Conclusion

Grounding the evaluation of LLM-powered agents in established theories of human and agent intelligence provides a robust framework for assessment. By decomposing performance across cognitive components, i.e., perception, reasoning, planning, decision-making and execution, we can diagnose strengths and weaknesses with precision. Crucially, the evolving landscape of agentic AI requires a continued emphasis on human involvement. Domain expertise is essential for defining open-ended success criteria, interpreting ambiguous natural language instructions and subtle changes in social signals, and safeguarding real-world deployments. Looking forward, human supervision and collaboration will be essential to guide agents toward proactive, collaborative and socially aligned behaviors. In doing so, we can ensure that these LLM-powered agents advance in accuracy and efficiency and, more importantly, in trustworthiness, safety and alignment with human values.

References

Arvey, R.D., Bouchard, T.J., Carroll, J.B., Cattell, R.B., Cohen, D.B., Dawis, R.V. and Willerman, L., 1994. Mainstream science on intelligence. Wall Street Journal, 13(1), pp.18-25.

Brennan, S.E., 2014. The grounding problem in conversations with and through computers. In Social and cognitive approaches to interpersonal communication (pp. 201-225). Psychology Press.

Carroll, J.B., 1993. Human cognitive abilities: A survey of factor-analytic studies. New York: Cambridge University Press.

Chen, H., Xiong, M., Lu, Y., Han, W., Deng, A., He, Y., Wu, J., Li, Y., Liu, Y. and Hooi, B., 2025. MLR-Bench: Evaluating AI Agents on Open-Ended Machine Learning Research. arXiv preprint arXiv:2505.19955.

Deng, X., Gu, Y., Zheng, B., Chen, S., Stevens, S., Wang, B., Sun, H. and Su, Y., 2023. Mind2web: Towards a generalist agent for the web. Advances in Neural Information Processing Systems, 36, pp.28091-28114.

Grice, H.P., 1975. Logic and conversation. In P. Cole and J. Morgan (eds.), Syntax and Semantics 3: Speech Acts. New York: Academic Press, pp. 183-98.

Jimenez, C.E., Yang, J., Wettig, A., Yao, S., Pei, K., Press, O. and Narasimhan, K., 2023. Swe-bench: Can language models resolve real-world github issues?. arXiv preprint arXiv:2310.06770.

Nori, H., Daswani, M., Kelly, C., Lundberg, S., Ribeiro, M.T., Wilson, M., Liu, X., Sounderajah, V., Carlson, J., Lungren, M.P. and Gross, B., 2025. Sequential Diagnosis with Language Models. arXiv preprint arXiv:2506.22405.

Premack, D. and Premack, A.J., 1995. Origins of human social competence. In M.S. Gazzaniga (ed.), The cognitive neurosciences (pp. 205-218). Cambridge, MA: MIT Press.

Spearman, C., 1904. 'General intelligence,' objectively determined and measured. The American Journal of Psychology, 15(2), pp.201-293.

Russell, S. and Norvig, P., 2021. Artificial intelligence: a modern approach.

Tian, Y., Yang, X., Zhang, J., Dong, Y. and Su, H., 2024. Evil geniuses: Delving into the safety of llm-based agents. arXiv preprint arXiv:2311.11855.

Trivedi, H., Khot, T., Hartmann, M., Manku, R., Dong, V., Li, E., Gupta, S., Sabharwal, A. and Balasubramanian, N., 2024. Appworld: A controllable world of apps and people for benchmarking interactive coding agents. arXiv preprint arXiv:2407.18901.

Xu, F. 2024. Language Model as Agents. Talk presented at CMU Agentic Workshop 2024. Available at: https://phontron.com/class/anlp2024/assets/slides/anlp-20-agents.pdf [Accessed 3 Aug 2025].

Yang, W., Bi, X., Lin, Y., Chen, S., Zhou, J. and Sun, X., 2024. Watch out for your agents! investigating backdoor threats to llm-based agents. Advances in Neural Information Processing Systems, 37, pp.100938-100964.

Yao, S., Shinn, N., Razavi, P. and Narasimhan, K., 2024. τ-bench: A Benchmark for Tool-Agent-User Interaction in Real-World Domains. arXiv preprint arXiv:2406.12045.

Yu, B., Zhu, Y., He, P. and Kang, D., 2025. Utboost: Rigorous evaluation of coding agents on swe-bench. arXiv preprint arXiv:2506.09289.

Zhou, S., Xu, F.F., Zhu, H., Zhou, X., Lo, R., Sridhar, A., Cheng, X., Ou, T., Bisk, Y., Fried, D. and Alon, U., 2023. Webarena: A realistic web environment for building autonomous agents. arXiv preprint arXiv:2307.13854.


Zhu, Y., Kellermann, A., Bowman, D., Li, P., Gupta, A., Danda, A., Fang, R., Jensen, C., Ihli, E., Benn, J. and Geronimo, J., 2025. CVE-Bench: A Benchmark for AI Agents' Ability to Exploit Real-World Web Application Vulnerabilities. arXiv preprint arXiv:2503.17332.