Agentic framework showdown: We tested eight AI agent frameworks to find which perform best

Luis Felipe Zeni

Staff Data Scientist

Christopher Frenchi

AI Research Engineer

Daniel Michael

Staff Software Engineer

Nish Tahir

Principal Software Engineer

In our recent blog on orchestrating multi-agent AI systems, we explored the power of multiple specialized AI agents collaborating with an orchestrating agent to solve complex tasks. Of course, we must build multiple AI agents before we can orchestrate them. So, we must ask ourselves, what’s the best way to build all those agents?

Pure Python offers us flexibility and control. But agentic frameworks offer us different advantages. By harnessing the design architecture of an agentic framework, we enforce team alignment on best practices, especially for larger or more complex projects and workflows.

By aligning developers behind a common design pattern, these agentic frameworks help us focus on solving our specific problem. They reduce complexity and streamline decision-making as we build our agents.

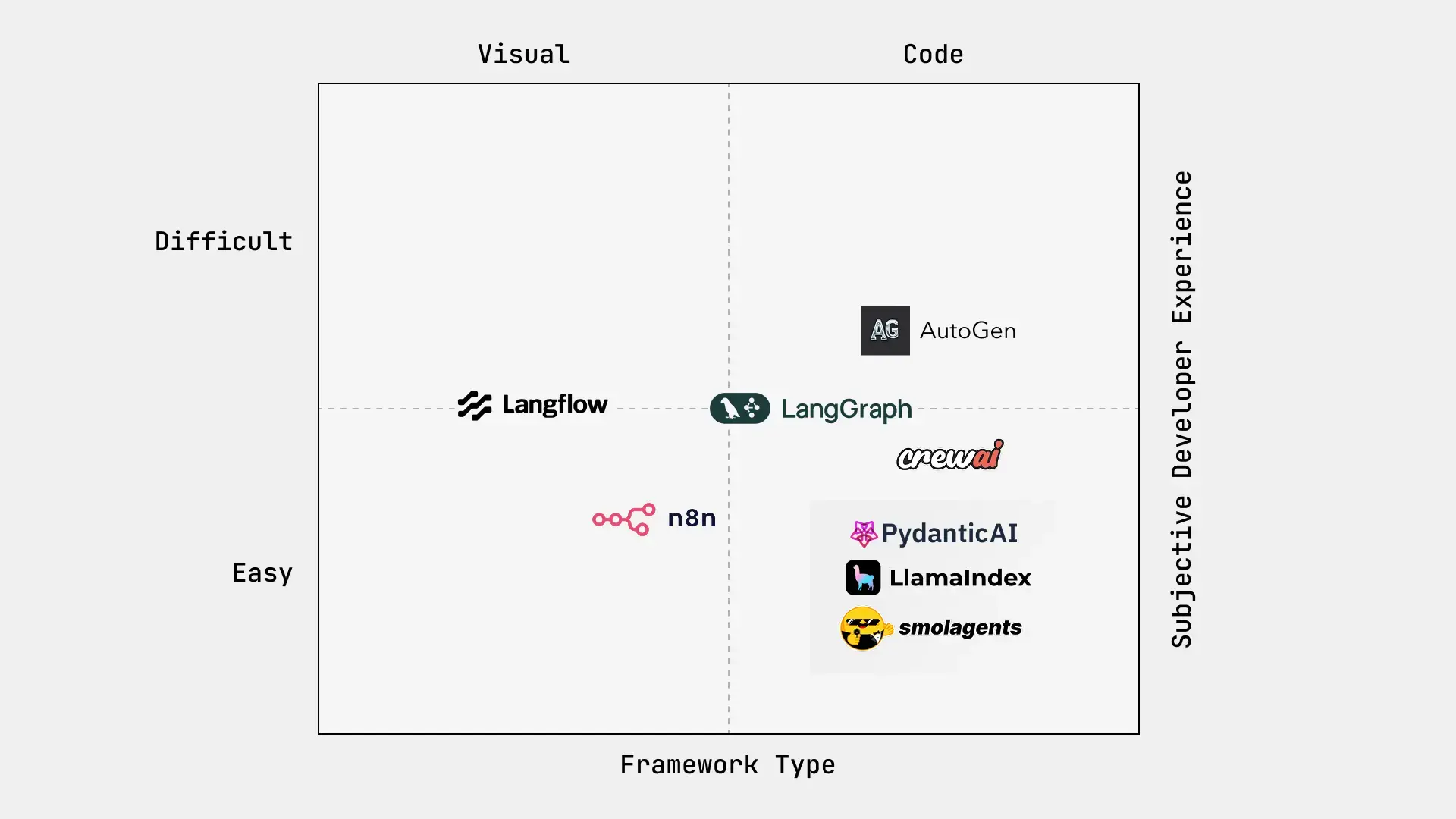

To find the best agentic framework for our client projects, we tested eight of the most promising AI agent frameworks currently available, some of them relative newborns less than six months past their first release.

We evaluated the subjective experience of building AI agents with each framework, detailing our methodology and findings below. We also include some key considerations AI practitioners should know when using an agentic framework.

Methodology: How we chose and evaluated 8 agentic frameworks

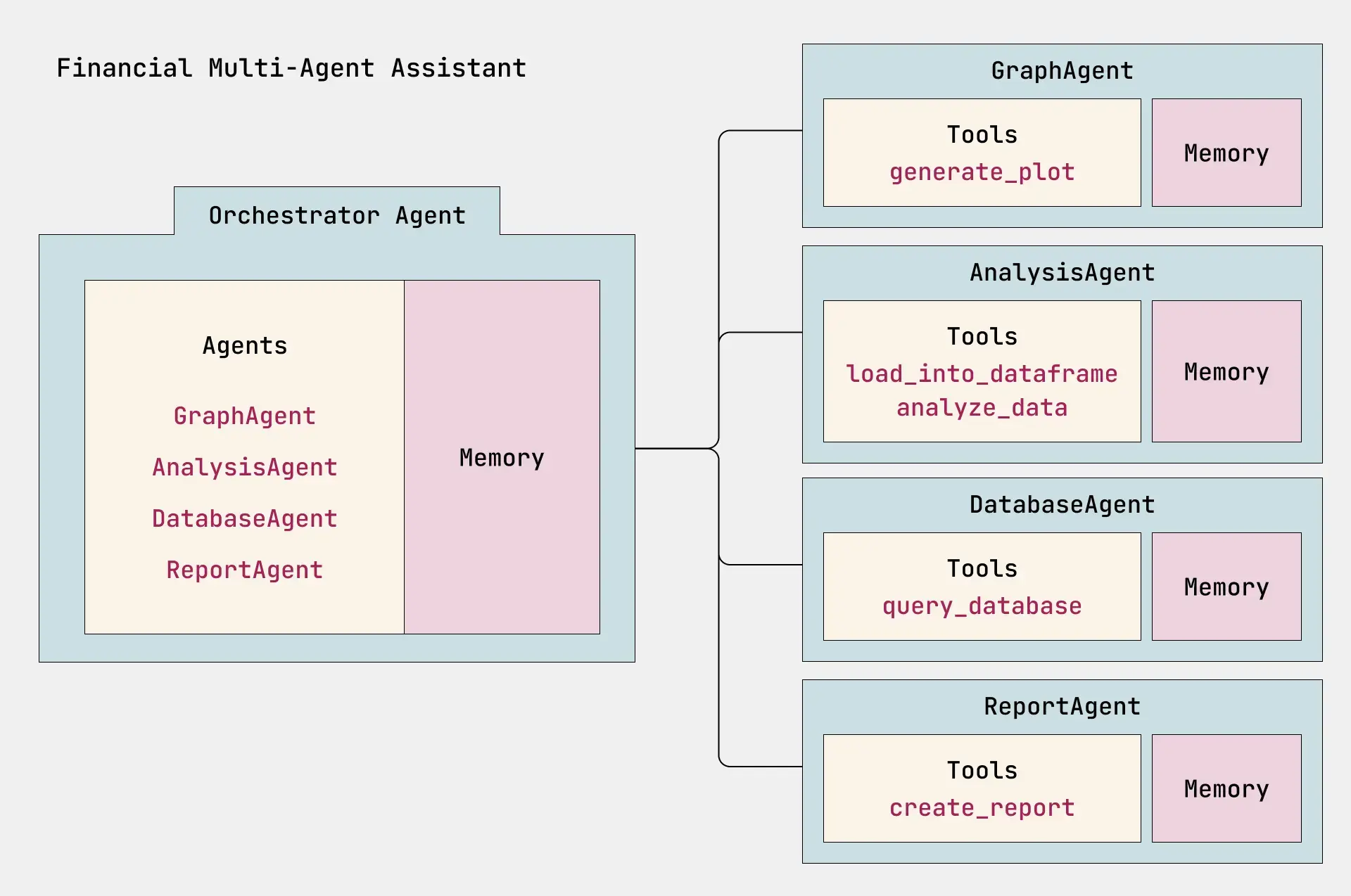

To make a fair comparison, we applied each framework to the same financial multi-agent assistant workflow, detailed in the accompanying graphic. We chose our eight frameworks because they all supported the capabilities our agents need (i.e., multi-agent design, orchestration, tool integration and memory).

As the graphic shows, the financial multi-agent workflow has five types of agents, each one highly specialized in one class of task:

- Orchestrator Agent: Decides which agent to call and in what order.

- Database Agent: Queries and retrieves data from a database.

- Analysis Agent: Analyzes the returned data.

- Graph Agent: Generates visualizations based on analysis.

- Report Agent: Creates a report document to share the insights from analysis.

Each of these agents has its own independent memory and tools. The Orchestrator Agent plans the sequence of subtasks needed to complete the full task that the user requested, using the specialized agents as tools to complete each of the subtasks.
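To make the "agents as tools" idea concrete, here is a minimal pure-Python sketch of the workflow described above. It is not taken from any of the frameworks we tested: the `Agent` and `Orchestrator` classes, the `build_workflow` helper and the fixed plan are all hypothetical stand-ins, and the lambda handlers replace what would be LLM calls and real tools in an actual system.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """A specialized agent with its own private memory (hypothetical stand-in)."""
    name: str
    handler: Callable[[str], str]
    memory: list[str] = field(default_factory=list)

    def run(self, task: str) -> str:
        result = self.handler(task)
        # Each agent records only its own interactions.
        self.memory.append(f"{task} -> {result}")
        return result


@dataclass
class Orchestrator:
    """Plans subtasks and calls specialized agents as if they were tools."""
    agents: dict[str, Agent]
    memory: list[str] = field(default_factory=list)

    def plan(self, request: str) -> list[str]:
        # Assumption: a fixed plan. A real orchestrator would ask an LLM
        # to choose the agents and their order based on the request.
        return ["database", "analysis", "graph", "report"]

    def run(self, request: str) -> str:
        output = request
        for agent_name in self.plan(request):
            # The output of one subtask becomes the input of the next.
            output = self.agents[agent_name].run(output)
            self.memory.append(agent_name)
        return output


def build_workflow() -> Orchestrator:
    # Stub handlers stand in for LLM calls and real tools.
    agents = {
        "database": Agent("database", lambda t: f"rows({t})"),
        "analysis": Agent("analysis", lambda t: f"analysis({t})"),
        "graph": Agent("graph", lambda t: f"chart({t})"),
        "report": Agent("report", lambda t: f"report({t})"),
    }
    return Orchestrator(agents)


if __name__ == "__main__":
    orchestrator = build_workflow()
    print(orchestrator.run("Q3 revenue"))
```

Keeping a separate `memory` list on every agent mirrors the independent memory described above. In practice, each framework supplies its own version of these pieces, which is exactly what we set out to compare.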

To evaluate each agentic framework, we selected three major criteria that we think are the most relevant in selecting a framework:

- Developer experience: This evaluates usability factors such as learning curve and how easy it felt to implement modifications.

- Features: This reflects the quality of key features around agent customization and orchestration.

- Maturity: This tells us how battle-tested the agentic framework is, an important consideration if you plan to use the framework in production.

Now let’s see how the evaluations went.

Results of evaluating each agentic framework

We implemented the same agentic system in each framework. The developer who implemented the project then provided feedback on their experience, reflected in the scoring matrix below.

Our developers found that, overall, Smolagents was by far the easiest to set up and use. Writing with the Smolagents framework was very close to writing an agent in pure Python, and it abstracted away just the right amount of repetition to feel very comfortable. Tools are defined with the @tool decorator, and both agents and orchestration feel very intuitive.
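For readers unfamiliar with decorator-based tool definitions, the pattern can be sketched in pure Python as follows. This is not the Smolagents API: the `tool` decorator, `TOOL_REGISTRY` and `call_tool` below are hypothetical names we made up to illustrate the general idea of turning a plain function into something an agent can discover and call.

```python
import inspect
from typing import Callable

# Hypothetical registry mapping tool names to their metadata.
TOOL_REGISTRY: dict[str, dict] = {}


def tool(func: Callable) -> Callable:
    """Register a plain function as an agent tool (illustrative helper)."""
    TOOL_REGISTRY[func.__name__] = {
        # The docstring doubles as the description an LLM would read.
        "description": inspect.getdoc(func) or "",
        "parameters": list(inspect.signature(func).parameters),
        "call": func,
    }
    return func


@tool
def percent_change(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


def call_tool(name: str, **kwargs):
    # An agent would pick the name and arguments; here we pass them directly.
    return TOOL_REGISTRY[name]["call"](**kwargs)


if __name__ == "__main__":
    print(call_tool("percent_change", old=100.0, new=125.0))
```

The appeal of this style is that the tool stays an ordinary, testable Python function, and the decorator only adds the metadata an agent needs.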

Autogen, on the other hand, felt heavy. LangGraph required some grounding in graph theory to get a good feel for the process, and CrewAI has both tasks and crews to think about when developing. In these frameworks, creating agents, tools/functions and orchestration took a lot more code and thought to get the same results.

All of the code-focused frameworks allowed for deep agent customization, while the two visual frameworks were a bit difficult to get granular with. The major callout here was n8n’s visual approach to creating tools in Python, which cannot currently pull in Python libraries, so such tools have to sit behind another service’s API call.

The 3 agentic frameworks we recommend

Based on our experimentation and analysis, we highly recommend using PydanticAI, Smolagents or LlamaIndex for building autonomous AI agents. If you’re looking for a framework that has been around for a bit and battle-tested, we recommend LlamaIndex for production-ready apps.

Of course, the “best” framework will vary depending on your specific project requirements, team expertise and use cases. We encourage AI practitioners to consider these results as a starting point for their own evaluations, taking into account their unique needs and constraints when selecting an AI agent framework for their projects.

Lastly, although our comparison showed that the best framework for most use cases will be LlamaIndex, it’s worth watching the newer contenders, PydanticAI and Smolagents. As they mature, these two frameworks could become what many teams use for their next projects.

If you need help developing your agentic AI systems, we’re happy to help. Our experience spans AI strategy and governance, generative AI experiences, voice and beyond. Learn more by exploring our Data & AI Solutions.